October 30, 2024 at 11:54PM

As AI deepfakes advance, regulators are using existing fraud laws to address misuse, since no federal legislation specifically targets deepfakes. Agencies like the FTC and SEC focus on combating deception and impersonation. Challenges around privacy, accountability, and deepfake detection remain, so organizations need proactive AI governance and monitoring to mitigate risks and maintain trust.

**Meeting Takeaways:**

1. **Regulatory Landscape:**

– Regulators are using existing fraud and deceptive practice rules to combat AI-generated deepfakes, as no federal law specifically addresses deepfakes.

– Agencies like the FTC and SEC are creatively applying existing laws to curb misuse and fraud, and the FTC has committed to actively pursuing AI fraudsters.

2. **Technological Challenges:**

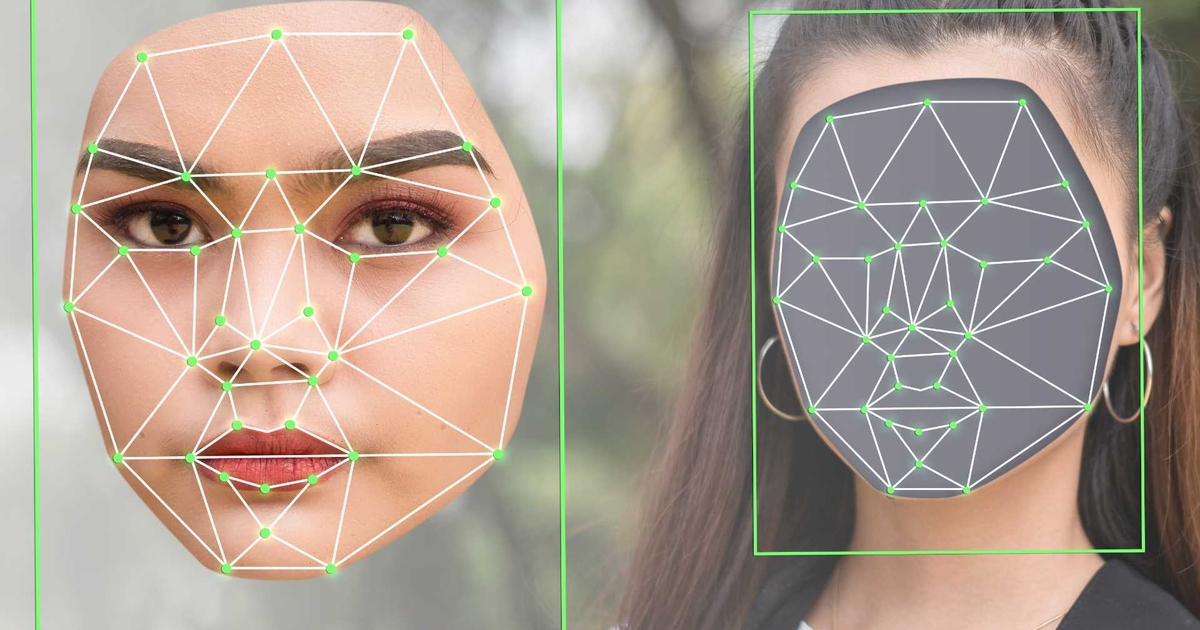

– The quality of AI-generated deepfakes has advanced significantly, making it difficult for individuals to tell authentic content from fabricated content.

– Real-time tools for identifying deepfakes are still in development, and problems persist even when viewers recognize that a deepfake is not real.

3. **Corporate Risks:**

– Deepfakes create risks of corporate misconduct, such as misleading information about executives or companies that could affect stock prices.

– Intent to deceive is a classic element of fraud, so deceiving others with deepfakes can lead to legal repercussions from agencies like the SEC.

4. **Legal Framework:**

– While several states have laws regarding deepfakes, there is no unified federal legislation. A federal judge recently blocked a California law aimed at election-related deepfakes, citing First Amendment concerns.

– 45 states and the District of Columbia have laws prohibiting the use of deepfakes in elections.

5. **Privacy and Accountability:**

– Existing laws mainly protect celebrities, leaving non-celebrities vulnerable to deepfake-related privacy violations.

– Identifying creators of harmful deepfakes poses significant challenges due to internet anonymity.

6. **Corporate Awareness and Policy Development:**

– Many corporate executives are unaware of the ease of creating and distributing deepfakes.

– Emphasis was placed on establishing AI governance policies and controlling access to AI tools within organizations to limit misuse.

7. **Proactive Measures:**

– Organizations should adopt comprehensive governance policies for AI use, similar to existing cybersecurity risk controls.

– Continuous monitoring and regular policy updates will be essential to keep pace with the evolving regulatory landscape and guard against the risks associated with deepfakes.