October 31, 2023 at 08:59AM

President Biden signed a new executive order on artificial intelligence, emphasizing the need for trustworthy and helpful AI. Stanford University’s Foundation Model Transparency Index suggests that major AI developers lack transparency. OpenAI’s GPT-4, for example, received a low score due to the withholding of important details. The executive order aims to improve transparency by requiring safety test sharing and the creation of standards by the National Institute of Standards and Technology. Developers of high-risk models must notify the government and share safety test results before public release.

President Biden signed a new executive order on artificial intelligence (AI) that emphasizes the importance of AI being trustworthy and helpful. The order followed discussions between AI companies and the White House, which resulted in promises of greater transparency about AI’s capabilities and limitations.

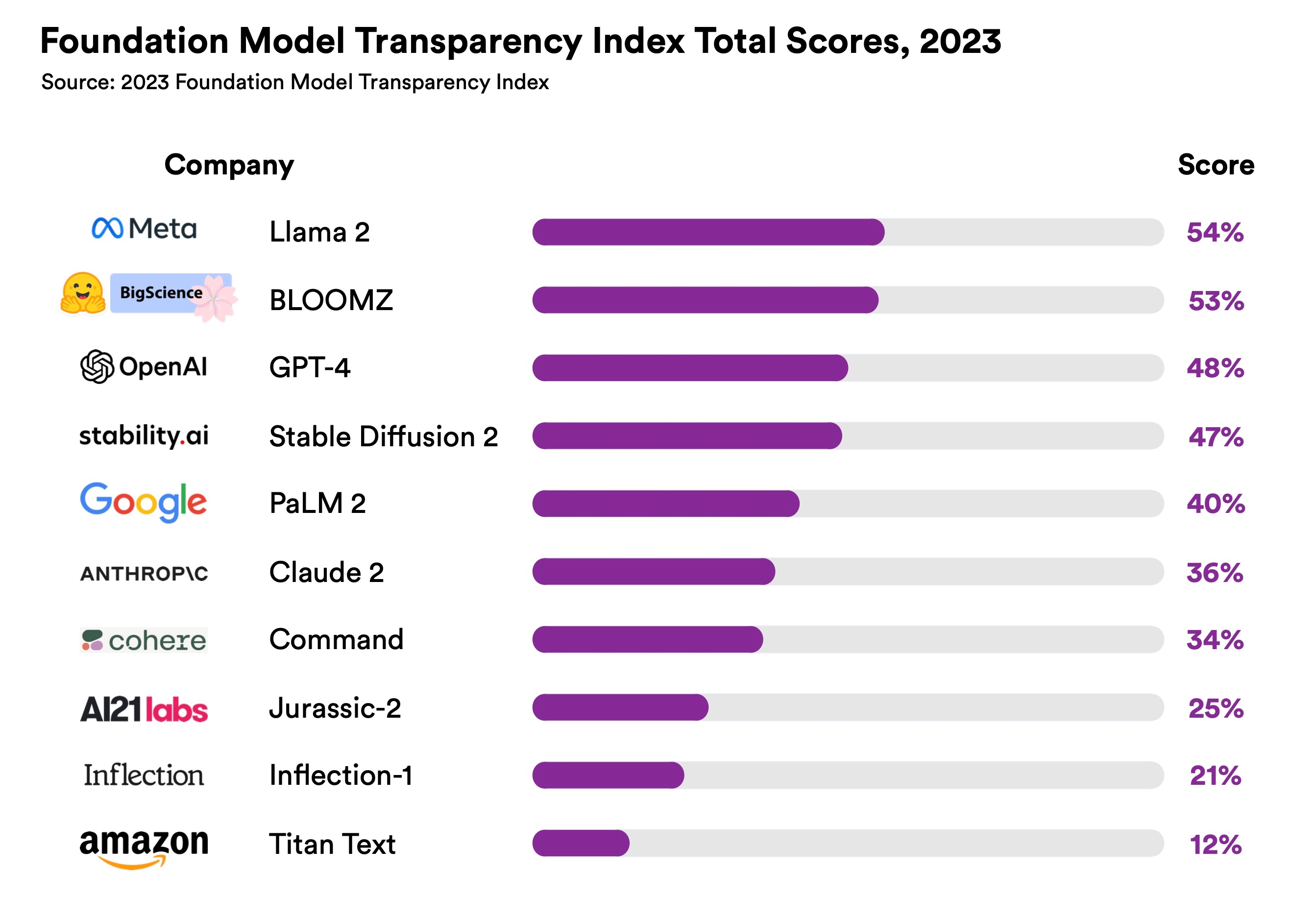

To measure transparency, researchers at Stanford University’s Center for Research on Foundation Models developed the Foundation Model Transparency Index. The index assessed 10 models against various metrics, including training methods, model properties, distribution, and usage. Scores were calculated from publicly available data, although companies were allowed to provide additional information that could change their score.

According to the Stanford researchers, no major foundation model developer currently offers adequate transparency. OpenAI’s GPT-4 scored 48 on the index, and OpenAI chose to withhold details about the architecture, training method, and other aspects when moving from GPT-3 to GPT-4. Overall, widely used foundation models such as GPT-4 and Google’s PaLM 2 remain largely black-box systems, with little public information about how they are trained and used.

However, the models scored relatively well on user data protection and basic functionality. They performed better on indicators related to user data protection, model development details, and model capabilities and limitations.

The new executive order outlines several requirements to enhance transparency in the AI industry. AI developers will need to share safety test results and other relevant information with the government. The National Institute of Standards and Technology has been tasked with establishing standards to ensure that AI tools are safe and secure before their public release. Additionally, companies developing models that pose serious risks to public health, safety, the economy, or national security will be required to notify the federal government during the training process and share the results of red-team safety tests before making the models public.