January 8, 2024 at 08:36AM

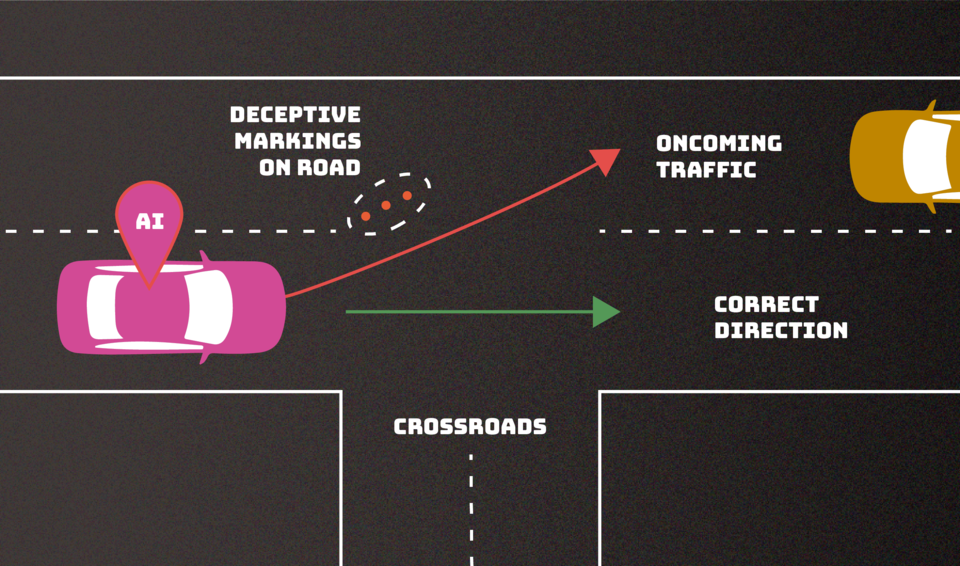

NIST’s report warns that AI systems are vulnerable to adversarial machine learning attacks and stresses that no foolproof defenses exist. It covers four attack types (evasion, poisoning, privacy, and abuse) and urges the community to develop better safeguards. Industry experts acknowledge the report’s depth and its importance for understanding and mitigating AI attacks.

NIST has published a comprehensive report on adversarial machine learning (AML) attacks and mitigations. The report describes the challenges of protecting AI systems and offers guidance on the different types of attack: evasion, poisoning, privacy, and abuse.

The involvement of NIST, Northeastern University, and Robust Intelligence Inc. reflects a collaborative effort to address AML challenges. Industry experts, including Joseph Thacker and Troy Batterberry, have commended the report for its depth and practical insights.

The report underscores that securing AI algorithms remains an unsolved problem and calls for better defenses. It also frames an understanding of AI attacks as essential to maintaining trust and integrity in AI-driven business solutions.

The report, titled ‘Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations’ (NIST.AI.100-2), is aimed at AI developers and users and acknowledges that there is no one-size-fits-all defense against these threats.

Overall, the report makes the case that understanding and preparing for AML attacks is not only a technical issue but also a strategic imperative.