May 10, 2024 at 04:03AM

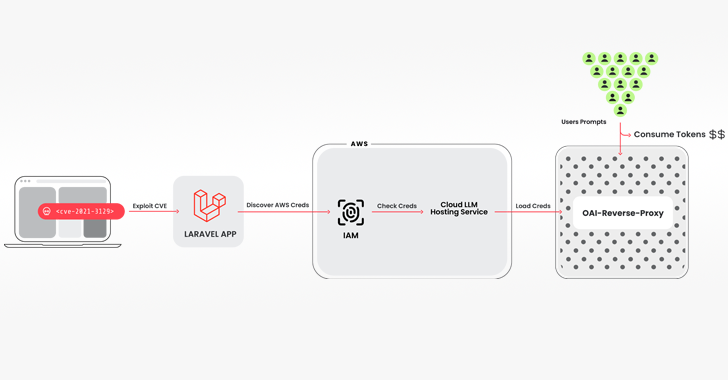

Cybersecurity researchers have uncovered a new attack, dubbed LLMjacking, that targets cloud-hosted large language model (LLM) services. The attackers breach a system running a vulnerable version of the Laravel Framework, obtain Amazon Web Services (AWS) credentials, and use them to access LLMs. They also query logging settings, likely to evade detection, while running up substantial costs for victims. Organizations are advised to enable detailed logging and to monitor cloud activity.

Key takeaways:

– The Sysdig Threat Research Team has discovered a new attack, which it calls LLMjacking, that uses stolen cloud credentials to target cloud-hosted large language model (LLM) services.

– The attackers breached a system running a vulnerable version of the Laravel Framework, then obtained Amazon Web Services (AWS) credentials and used them to access the LLM services.

– The attackers use an open-source Python script that checks and validates keys for LLM offerings from providers such as Anthropic, AWS Bedrock, Google Cloud Vertex AI, Mistral, and OpenAI.

– The goal is to monetize access to the LLMs while the cloud account owner pays for the usage without their knowledge or consent, potentially costing the victim over $46,000 per day in LLM consumption.

– Recommendations for organizations include enabling detailed logging, monitoring cloud logs for suspicious activity, and implementing effective vulnerability management processes to prevent initial access.
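As a rough illustration of the monitoring advice, the Python sketch below scans CloudTrail-style log records for Bedrock API calls made by identities outside an expected set. It is a minimal sketch under stated assumptions: the `EXPECTED_PRINCIPALS` allow-list, the account ID, the IP addresses, and the `flag_suspicious_bedrock_events` helper are all hypothetical, and it assumes the relevant Bedrock calls (such as `InvokeModel` and `GetModelInvocationLoggingConfiguration`) appear in your CloudTrail logs with `eventSource` set to `bedrock.amazonaws.com`. Check which events your trail actually records before relying on a check like this.

```python
from dataclasses import dataclass

# Hypothetical allow-list: the only identities expected to call Bedrock
# in this account (example account ID from AWS documentation).
EXPECTED_PRINCIPALS = {"arn:aws:iam::111122223333:role/app-llm-role"}

# Bedrock calls worth a closer look when made by an unexpected identity.
# Querying the invocation logging configuration reveals whether prompts are
# logged; the Invoke* calls run models and incur cost.
# Assumption: these events are present in your CloudTrail logs.
WATCHED_EVENTS = {
    "GetModelInvocationLoggingConfiguration",
    "InvokeModel",
    "InvokeModelWithResponseStream",
}


@dataclass(frozen=True)
class Finding:
    event_name: str
    principal: str
    source_ip: str


def flag_suspicious_bedrock_events(records, expected_principals):
    """Return a Finding for each watched Bedrock call by an unexpected principal.

    `records` is a list of CloudTrail-style event dicts, e.g. the "Records"
    array of a CloudTrail log file.
    """
    findings = []
    for record in records:
        # Skip events from other AWS services.
        if record.get("eventSource") != "bedrock.amazonaws.com":
            continue
        name = record.get("eventName", "")
        if name not in WATCHED_EVENTS:
            continue
        # Records without an ARN are treated as unexpected and flagged.
        principal = record.get("userIdentity", {}).get("arn", "<unknown>")
        if principal in expected_principals:
            continue
        findings.append(
            Finding(name, principal, record.get("sourceIPAddress", "<unknown>"))
        )
    return findings


if __name__ == "__main__":
    # Hypothetical sample records; the IPs are from documentation ranges.
    sample = [
        {
            "eventSource": "bedrock.amazonaws.com",
            "eventName": "InvokeModel",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:role/app-llm-role"},
            "sourceIPAddress": "10.0.0.5",
        },
        {
            "eventSource": "bedrock.amazonaws.com",
            "eventName": "GetModelInvocationLoggingConfiguration",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:user/legacy-ci"},
            "sourceIPAddress": "203.0.113.7",
        },
    ]
    for finding in flag_suspicious_bedrock_events(sample, EXPECTED_PRINCIPALS):
        print(finding)
```

Matching on an allow-list of principals keeps the check simple, but stolen credentials may well belong to an identity that is on the list, so in practice you would probably also alert on unusual regions, request volumes, or source IP addresses.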
